Our selection of the top business news sources on the web.

| |

| |

| |

Quote: Sergey Brin, Google Co-founder

“We simply do not know what the limit to intelligence is. There's no law that says, 'Can you be 100 times smarter than Einstein? Can you be a billion times smarter? Can you be a Google times smarter?' I think we have just no idea what the laws governing that are.” - Sergey Brin, Google Co-founder

The quote is from Sergey Brin, Google Co-founder, in an interview with CatGPT. The interview took place immediately after Google I/O 2025.

Sergey Brin, born on August 21, 1973, in Moscow, Russia, is a renowned computer scientist and entrepreneur best known for co-founding Google alongside Larry Page. His journey from a young immigrant to a tech visionary has significantly influenced the digital landscape.

Early Life and Education

In 1979, at the age of six, Brin emigrated with his family from the Soviet Union to the United States, seeking greater opportunities and freedom. They settled in Maryland, where Brin developed an early interest in mathematics and computer science, inspired by his father, a mathematics professor. He pursued his undergraduate studies at the University of Maryland, earning a Bachelor of Science in Computer Science and Mathematics in 1993. Brin then continued his education at Stanford University, where he met Larry Page, setting the stage for their future collaboration.

The Genesis of Google

While at Stanford, Brin and Page recognized the limitations of existing search engines, which ranked results based on the number of times a search term appeared on a page. They developed the PageRank algorithm, which assessed the importance of web pages based on the number and quality of links to them. This innovative approach led to the creation of Google in 1998, a name derived from "googol," reflecting their mission to organize vast amounts of information. Google's rapid growth revolutionized the way people accessed information online.
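The idea behind PageRank described above (a page matters more when many important pages link to it) can be illustrated with a small power-iteration sketch. This is a simplified textbook formulation, not Google's production algorithm; the toy link graph, the damping factor of 0.85, and the function name are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate page importance from a link graph by power iteration.

    `links` maps each page to the list of pages it links to.
    The damping factor and iteration count are illustrative defaults.
    """
    # Collect every page that appears as a source or a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly across its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: "c" is linked to by three pages, "d" by none.
toy_web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the most-linked page ends up with the highest score and the page nobody links to ends up with the lowest, which is the contrast with simple keyword counting that the paragraph above describes.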
Leadership at Google

As Google's President of Technology, Brin played a pivotal role in the company's expansion and technological advancements. Under his leadership, Google introduced a range of products and services, including Gmail, Google Maps, and Android. In 2015, Google underwent a significant restructuring, becoming a subsidiary of Alphabet Inc., with Brin serving as Alphabet's president. He stepped down from this role in December 2019 but remained involved as a board member and controlling shareholder.

Advancements in Artificial Intelligence

In May 2025, during the Google I/O conference, Brin participated in an interview where he discussed the rapid advancements in artificial intelligence (AI). He highlighted the unpredictability of AI's potential, stating, "We simply do not know what the limit to intelligence is. There's no law that says, 'Can you be 100 times smarter than Einstein? Can you be a billion times smarter? Can you be a Google times smarter?' I think we have just no idea what the laws governing that are."

At the same event, Google unveiled significant updates to its Gemini AI models. The Gemini 2.5 Pro model introduced the "Deep Think" mode, enhancing the AI's ability to tackle complex tasks, including advanced reasoning and coding. Additionally, the Gemini 2.5 Flash model became the default, offering faster response times. These developments underscore Google's commitment to integrating advanced AI technologies into its services, aiming to provide users with more intuitive and efficient experiences.

Personal Life and Legacy

Beyond his professional achievements, Brin has been involved in various philanthropic endeavors, particularly in supporting research for Parkinson's disease, a condition affecting his mother. His personal and professional journey continues to inspire innovation and exploration in the tech industry.
Brin's insights into the future of AI reflect a broader industry perspective on the transformative potential of artificial intelligence. His contributions have not only shaped Google's trajectory but have also had a lasting impact on the technological landscape.

|

| |

| |

Quote: Satya Nadella, Chairman and CEO of Microsoft

“I think we as a society celebrate tech companies far too much versus the impact of technology... I just want to get to a place where we are talking about the technology being used and when the rest of the industry across the globe is being celebrated because they use technology to do something magical for all of us, that would be the day.” - Satya Nadella, Chairman and CEO of Microsoft

The quote is from an interview with Rowan Cheung that took place immediately after Microsoft Build 2025.

Satya Nadella, the Chairman and CEO of Microsoft, has been at the helm of the company since 2014, steering it through significant technological transformations. Under his leadership, Microsoft has embraced cloud computing, artificial intelligence (AI), and a more open-source approach, solidifying its position as a leader in the tech industry. Microsoft Build is an annual event that showcases the company's latest innovations and developments, particularly in the realms of software development and cloud computing.

Microsoft Build 2025: Key Announcements and Context

At Microsoft Build 2025, held in Seattle, Microsoft underscored its deep commitment to artificial intelligence, with Nadella's keynote emphasizing AI integration across Microsoft platforms. A significant highlight was the expansion of Copilot AI in Windows 11 and Microsoft 365, introducing features like autonomous agents and semantic search. Microsoft also showcased new Surface devices and introduced its own AI models to reduce reliance on OpenAI.
In a strategic move, Microsoft announced it would host Elon Musk's xAI model, Grok, on its cloud platform, adding Grok 3 and Grok 3 mini to the portfolio of third-party AI models available through Microsoft’s cloud services. Additionally, Microsoft introduced NLWeb, an open project aimed at simplifying the development of AI-powered natural language web interfaces, and emphasized a vision of an “open agentic web,” where AI agents can perform tasks and make decisions for users and organizations. These announcements reflect Microsoft's strategic focus on AI and its commitment to providing developers with the tools and platforms necessary to build innovative, AI-driven applications.

|

| |

| |

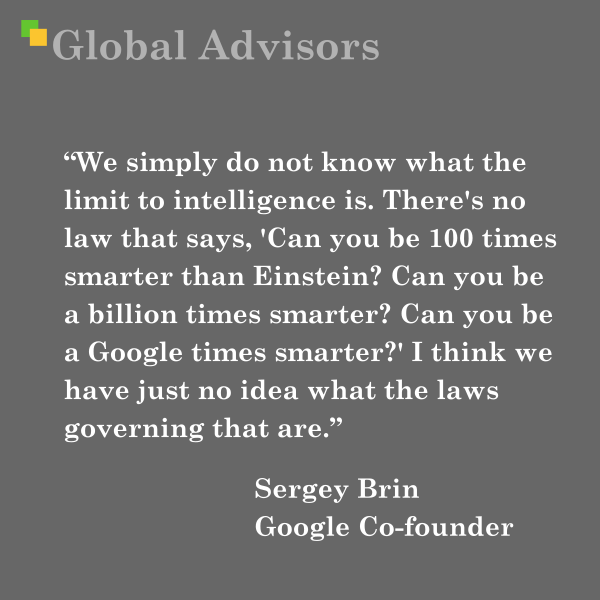

Infographic: Four critical DeepSeek enablers

The DeepSeek team has introduced several high-impact changes to Large Language Model (LLM) architecture to enhance performance and efficiency:

The Impact of Open-Source Models: DeepSeek's success highlights a fundamental shift in AI development. Traditionally, leading-edge models have been closed-source and controlled by Western AI firms like OpenAI, Google, and Anthropic. However, DeepSeek's approach, leveraging open-source components while innovating on training efficiency, has disrupted this dynamic. Alberto Pelliccione notes that DeepSeek now offers similar performance to OpenAI at just 5% of the cost, making high-quality AI more accessible. This shift pressures proprietary AI companies to rethink their business models and embrace greater openness.

Challenges and Innovations in the Chinese AI Ecosystem: China's AI sector faces major constraints, particularly in access to high-performance GPUs due to U.S. export restrictions. Yet Chinese companies like DeepSeek have turned these challenges into strengths through aggressive efficiency improvements. Multi-head Latent Attention (MLA) and FP8 precision optimizations exemplify how innovation can offset hardware limitations. Furthermore, Chinese AI firms, historically focused on scaling existing tech, are now contributing to fundamental advancements in AI research, signaling a shift towards deeper innovation.

The Future of AI Control and Adaptation: DeepSeek-R1’s approach to training AI reasoners poses a challenge to traditional AI control mechanisms. Since reasoning capabilities can now be transferred to any capable model with fewer than a million curated samples, AI governance must extend beyond compute resources and focus on securing datasets, training methodologies, and deployment platforms. OpenAI has previously obscured Chain of Thought traces to prevent leakage, but DeepSeek’s open-weight release and published RL techniques have made such restrictions ineffective.

Broader Industry Context:

These innovations collectively contribute to more efficient and effective LLMs, balancing performance with resource utilization while shaping the future of AI model development.

Sources: Global Advisors, Jack Clark - Anthropic, Antoine Blondeau, Alberto Pelliccione, infoq.com, medium.com, en.wikipedia.org, arxiv.org

| |

| |

PODCAST: Effective Transfer Pricing

Our Spotify podcast discusses how to get transfer pricing right within organizations, highlighting the prevalent challenges and proposing solutions. The core issue is that poorly implemented internal pricing leads to suboptimal economic decisions, resource allocation problems, and interdepartmental conflict. The hosts advocate for market-based pricing over cost recovery, emphasizing the importance of clear price signals for efficient resource allocation and accurate decision-making. They stress the need for service level agreements, fair cost allocation, and a comprehensive process to manage the political and emotional aspects of internal pricing, ultimately aiming for improved organizational performance and profitability. The podcast includes case studies illustrating successful implementations and draws on the authors' expertise in this field.

Read more from the original article.

|

| |

| |

PODCAST: A strategic take on cost-volume-profit analysis

Our Spotify podcast highlights that, despite being familiar with cost-volume-profit (CVP) analysis, most managers either do not apply it or get it wrong even in its most basic form. The hosts explain CVP analysis, a crucial business tool that is often misapplied. They detail its theoretical underpinnings, using graphs to illustrate the relationships between price, volume, and profit. They highlight common errors in CVP application, such as neglecting volume changes after price increases, which can lead to the "margin-price-volume death spiral." They also offer practical advice and strategic questions to improve CVP analysis and decision-making, emphasizing the need for accurate costing and a nuanced understanding of market dynamics. Finally, the podcast provides case studies illustrating both successful and unsuccessful CVP implementations.

Read more from the original article.
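The price-volume trade-off at the heart of CVP analysis can be made concrete with a short sketch. It uses the standard single-product CVP relationships (profit equals unit contribution margin times volume, less fixed costs); all figures and function names are hypothetical and are not taken from the podcast.

```python
def profit(price, unit_variable_cost, volume, fixed_costs):
    """Profit = (price - variable cost per unit) * volume - fixed costs."""
    return (price - unit_variable_cost) * volume - fixed_costs


def break_even_volume(price, unit_variable_cost, fixed_costs):
    """Units that must be sold for profit to be exactly zero."""
    margin = price - unit_variable_cost
    if margin <= 0:
        raise ValueError("price must exceed unit variable cost")
    return fixed_costs / margin


def volume_to_hold_profit(old_price, new_price, unit_variable_cost, old_volume):
    """Volume needed at the new price to earn the same profit as before."""
    old_margin = old_price - unit_variable_cost
    new_margin = new_price - unit_variable_cost
    if new_margin <= 0:
        raise ValueError("new price must exceed unit variable cost")
    return old_volume * old_margin / new_margin


# Hypothetical product: price 100, variable cost 60, fixed costs 30,000.
base_profit = profit(100, 60, 1000, 30_000)             # profit at 1,000 units
base_break_even = break_even_volume(100, 60, 30_000)    # units to break even

# A 10% price increase only pays off if volume stays above this threshold.
threshold = volume_to_hold_profit(100, 110, 60, 1000)

# If the increase drives volume down by 25%, profit falls despite the higher price.
profit_after_volume_loss = profit(110, 60, 750, 30_000)

print(base_profit, base_break_even, threshold, profit_after_volume_loss)
```

With these assumed numbers, the price increase raises profit only if fewer than 20% of unit sales are lost. Ignoring that volume response is the kind of error the hosts describe.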

|

| |

| |

Quote: Dario Amodei

"If we want AI to favor democracy and individual rights, we are going to have to fight for that outcome." - Dario Amodei

|

| |

| |

Quote: Dario Amodei

"It’s my guess that powerful AI could at least 10x the rate of these discoveries, giving us the next 50-100 years of biological progress in 5-10 years." - Dario Amodei

| |

| |

Quote: Dario Amodei

“I think that most people are underestimating just how radical the upside of AI could be, just as I think most people are underestimating how bad the risks could be.” - Dario Amodei

|

| |

| |

Quote: Sam Altman

“Build a company that benefits from the model getting better and better ... I encourage people to be aligned with that.” - Sam Altman

|

| |

| |

PODCAST: Your Due Diligence is Most Likely Wrong

Our Spotify podcast explores why most mergers and acquisitions fail to create value and provides a practical guide to performing a strategic due diligence process. The hosts highlight common pitfalls like overpaying for acquisitions, failing to understand the true value of a deal, and neglecting to account for future uncertainties. They emphasize that a successful deal depends on a clear strategic rationale, a thorough understanding of the target's competitive position, and a comprehensive assessment of potential risks. They then present a four-stage approach to strategic due diligence that incorporates scenario planning and probabilistic simulations to quantify uncertainty and guide decision-making. Finally, they discuss how to navigate deal-making during economic downturns and stress the importance of securing existing businesses, revisiting return measures, prioritizing potential targets, and factoring in potential delays.

Read more from the original article.
